- #EXPORT CSV FROM ELASTICSEARCH HOW TO#

- #EXPORT CSV FROM ELASTICSEARCH CODE#

- #EXPORT CSV FROM ELASTICSEARCH DOWNLOAD#

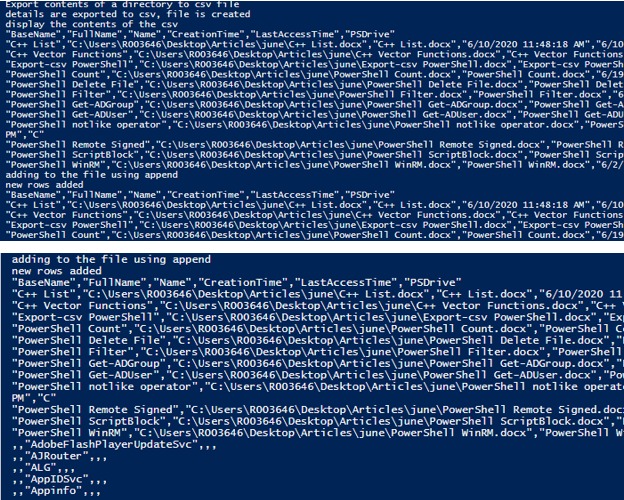

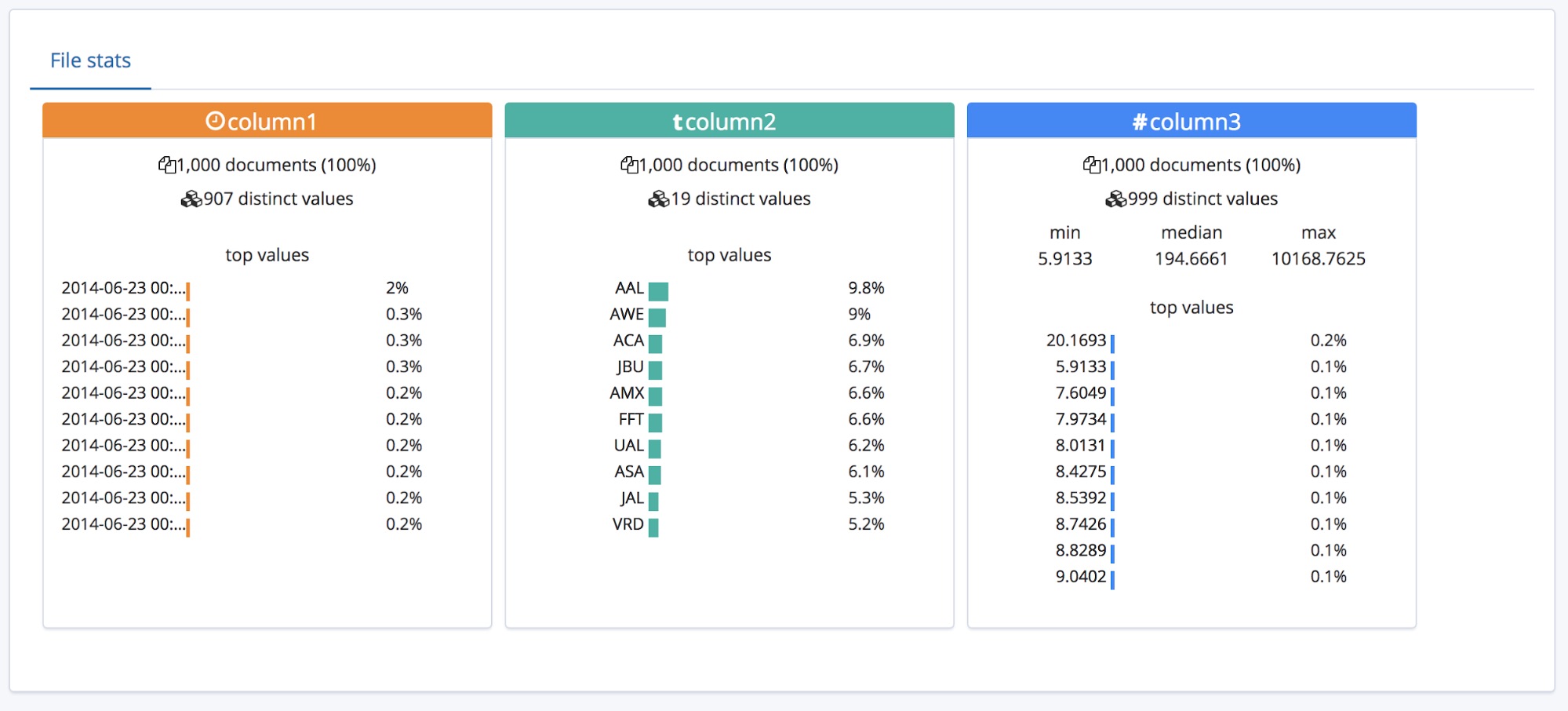

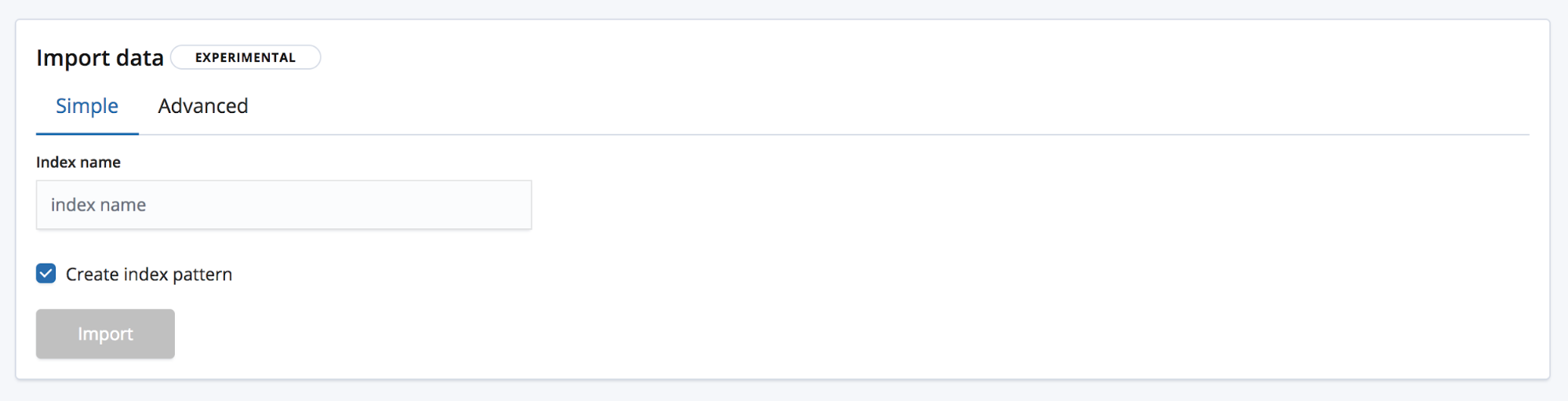

We are going to create an automation chain to query the Nuxeo repository and render the query result as a template-based CSV file. The chain should look like this:

- Context.FetchDocument

In this example, we query all the documents in the repository. You can define the query to filter the content repository and display only the documents that meet the query conditions. See the NXQL page for more information about the Nuxeo SQL-like query language.

The template handles a list of documents. For each of these documents, we need to retrieve some of its metadata and display it. The element dc:subjects is a list, so it has to be listed as one using the script.

To download a file in HTML format instead of CSV, use the .html extension in the filename and choose text/xml as the mimetype. Don't forget to check the Download attribute checkbox so that the result of the automation chain is downloaded on the client machine.

Step 3: Creating the Button to Generate the CSV File

The only step left is to create a button (User Action -> New Action Feature) that will trigger this operation chain.

The goal of this tutorial is to show you a quick and simple method of importing a CSV file into your Elasticsearch cluster using the Kibana dashboard.

Export Data from ElasticSearch to CSV by Raw or Lucene Query (e.g. Works with ElasticSearch 6+ (OpenSearch works too) and makes use of ElasticSearch's Scroll API and Go's concurrency possibilities to work as fast as possible.
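The Scroll API mentioned above returns results one page at a time: each response carries a `_scroll_id` and a batch of hits, and an empty batch signals the end. The following is a minimal Python sketch of that paging loop only, not the Go tool's implementation. The `fetch_next` callable and the canned pages are hypothetical stand-ins for real requests to a cluster.

```python
from typing import Callable, Iterator


def iter_scroll_hits(first_page: dict, fetch_next: Callable[[str], dict]) -> Iterator[dict]:
    """Yield every hit from a scrolled search, one page at a time.

    first_page: the response of the initial search opened with a scroll timeout.
    fetch_next: hypothetical callable that takes a scroll ID and returns the next page.
    """
    page = first_page
    while True:
        hits = page["hits"]["hits"]
        if not hits:  # an empty page means the scroll is exhausted
            break
        yield from hits
        page = fetch_next(page["_scroll_id"])


# Stand-in for a cluster: three canned pages, the last one empty.
_pages = [
    {"_scroll_id": "s1", "hits": {"hits": [{"_id": "1"}, {"_id": "2"}]}},
    {"_scroll_id": "s2", "hits": {"hits": [{"_id": "3"}]}},
    {"_scroll_id": "s3", "hits": {"hits": []}},
]
_remaining = iter(_pages[1:])


def fake_fetch_next(scroll_id: str) -> dict:
    # A real implementation would send the scroll ID back to the cluster.
    return next(_remaining)


scrolled_ids = [hit["_id"] for hit in iter_scroll_hits(_pages[0], fake_fetch_next)]
print(scrolled_ids)
```

Passing the page fetcher in as a callable keeps the loop independent of any particular client library, so the same generator can feed a CSV writer row by row.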

Now we are ready to fill in the template. The first line of the template will represent the columns of the generated CSV file. Since those column names never change, type them as we want them to appear in the final document: Title, Description, Creator, Creation Date

We are having a problem with the CSV export of a 'Saved search' through our Dashboard: it hangs if the size of the CSV exceeds 150 MB. Nevertheless, we are able to export the CSV when we export the saved search from Discover (not a dashboard widget).
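To illustrate the fixed header row described above, here is a small Python sketch that writes the four column names once and then one row per document. It is not the Nuxeo template itself. The dictionary keys (`title`, `description`, `creator`, `created`) and the sample document are assumptions made for the example.

```python
import csv
import io

# Column names exactly as they should appear in the final document.
COLUMNS = ["Title", "Description", "Creator", "Creation Date"]


def render_csv(documents):
    """Render a list of document dicts as CSV text with a fixed header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(COLUMNS)  # the first line holds the column names
    for doc in documents:
        # The keys below are hypothetical; map them to your own metadata.
        writer.writerow([doc["title"], doc["description"], doc["creator"], doc["created"]])
    return buffer.getvalue()


sample_docs = [
    {
        "title": "Report, Q1",
        "description": "Quarterly report",
        "creator": "alice",
        "created": "2020-01-15",
    },
]
csv_text = render_csv(sample_docs)
print(csv_text)
```

The `csv` module quotes the title automatically because it contains a comma, which is the main reason to prefer it over joining strings by hand.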

#EXPORT CSV FROM ELASTICSEARCH CODE#

Step 1: Export data from PostgreSQL tables.

By exporting the data from the PostgreSQL tables to CSV files, creating an Elasticsearch index, and importing the data into the index, you can easily.

#EXPORT CSV FROM ELASTICSEARCH HOW TO#

I would like to export the Elasticsearch data that is returned from my query to a CSV file. I have been looking everywhere for how to do this, but all my attempts are failing. Note: I am not looking to just export all the contents of the Elasticsearch cluster into CSV format. Could someone please fix this code so that it works? I have been spending hours trying to make it work.

    import csv
    import elasticsearch

    es = elasticsearch.Elasticsearch()
    # this returns up to 100 rows, adjust to your needs

    # then open a csv file, and loop through the results, writing to the csv
    with open('outputfile.tsv', 'wb') as csvfile:
        # we use TAB delimited, to handle cases where freeform text may have a comma
        filewriter = csv.writer(csvfile, delimiter='\t',
                                quotechar='|', quoting=csv.QUOTE_MINIMAL)
        filewriter.writerow()  # change the column labels here
        col1 = hit.decode('utf-8')  # replace these nested key names with your own
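One possible way to approach this in Python 3 is sketched below. It assumes the query has already run and that the hits have the usual `response["hits"]["hits"]` shape, with each hit carrying a `_source` dictionary. The helper names, the field names, and the canned response are all hypothetical. Compared with the snippet in the question, the file is opened in text mode with `newline=""`, and no `.decode('utf-8')` is needed because Python 3 strings are already Unicode.

```python
import csv
import os
import tempfile


def get_nested(source, dotted_key, default=""):
    """Follow a dotted key such as 'user.name' through nested dicts."""
    value = source
    for part in dotted_key.split("."):
        if not isinstance(value, dict) or part not in value:
            return default
        value = value[part]
    return value


def write_hits_tsv(hits, path, fields):
    """Write one tab-delimited row per hit and return the number of hits written."""
    # Text mode with newline="" is what the csv module expects in Python 3.
    with open(path, "w", newline="", encoding="utf-8") as tsvfile:
        writer = csv.writer(tsvfile, delimiter="\t",
                            quotechar="|", quoting=csv.QUOTE_MINIMAL)
        writer.writerow(fields)  # column labels
        for hit in hits:
            source = hit.get("_source", {})
            writer.writerow([get_nested(source, field) for field in fields])
    return len(hits)


# Canned response standing in for the result of a real search call.
response = {"hits": {"hits": [
    {"_source": {"user": {"name": "alice"}, "message": "hello, world"}},
    {"_source": {"user": {"name": "bob"}}},
]}}

output_path = os.path.join(tempfile.gettempdir(), "outputfile.tsv")
rows_written = write_hits_tsv(response["hits"]["hits"], output_path, ["user.name", "message"])
print(rows_written)
```

Missing fields are written as empty cells instead of raising an error, which keeps the export going when documents do not all share the same structure.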
